Table of Links

- Abstract and Introduction

- SylloBio-NLI

- Empirical Evaluation

- Related Work

- Conclusions

- Limitations and References

A. Formalization of the SylloBio-NLI Resource Generation Process

B. Formalization of Tasks 1 and 2

C. Dictionary of gene and pathway membership

D. Domain-specific pipeline for creating NL instances and E. Accessing LLMs

H. Prompting LLMs - Zero-shot prompts

I. Prompting LLMs - Few-shot prompts

J. Results: Misaligned Instruction-Response

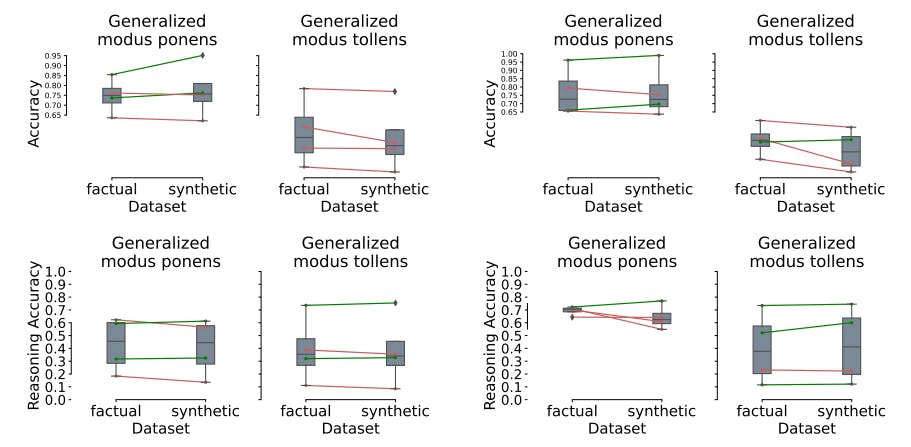

K. Results: Ambiguous Impact of Distractors on Reasoning

L. Results: Models Prioritize Contextual Knowledge Over Background Knowledge

M. Supplementary Figures and N. Supplementary Tables

6 Limitations

A key challenge for scaling our approach to other domains is its dependency on high-quality external ontologies and knowledge bases. This dependency limits the range of biomedical domains our analyses can cover. Future work could investigate more efficient methods for populating the natural language syllogistic arguments, such as the automated NLP methods used in RepoDB Brown and Patel [2017], MSI, Hetionet Himmelstein et al. [2017], DrugMechDB Gonzalez-Cavazos et al. [2023], and INDRA Gyori et al. [2017], Bachman et al. [2023], or synthetic data generation methods coupled with efficient quality checks. However, these approaches still struggle to balance precision and generalization, particularly for complex reasoning tasks in biomedicine. Further work is needed to develop scalable resources and more adaptable NLP techniques for real-world applications.

References

Bill MacCartney and Christopher D Manning. An extended model of natural logic. In Proceedings of the eighth international conference on computational semantics, pages 140–156. Association for Computational Linguistics, 2009.

Yongkang Wu, Meng Han, Yutao Zhu, Lei Li, Xinyu Zhang, Ruofei Lai, Xiaoguang Li, Yuanhang Ren, Zhicheng Dou, and Zhao Cao. Hence, socrates is mortal: A benchmark for natural language syllogistic reasoning. In Anna Rogers, Jordan Boyd-Graber, and Naoaki Okazaki, editors, Findings of the Association for Computational Linguistics: ACL 2023, pages 2347–2367, Toronto, Canada, July 2023. Association for Computational Linguistics. doi:10.18653/v1/2023.findings-acl.148. URL https://aclanthology.org/2023.findings-acl.148.

Mael Jullien, Marco Valentino, Hannah Frost, Paul O’Regan, Dónal Landers, and Andre Freitas. NLI4CT: Multievidence natural language inference for clinical trial reports. In Houda Bouamor, Juan Pino, and Kalika Bali, editors, Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, pages 16745–16764, Singapore, December 2023a. Association for Computational Linguistics. doi:10.18653/v1/2023.emnlp-main.1041. URL https://aclanthology.org/2023.emnlp-main.1041.

Mael Jullien, Marco Valentino, Hannah Frost, Paul O’Regan, Dónal Landers, and Andre Freitas. NLI4CT: Multievidence natural language inference for clinical trial reports. In Houda Bouamor, Juan Pino, and Kalika Bali, editors, Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, pages 16745–16764, Singapore, December 2023a. Association for Computational Linguistics. doi:10.18653/v1/2023.emnlp-main.1041. URL https://aclanthology.org/2023.emnlp-main.1041.

Maël Jullien, Marco Valentino, Hannah Frost, Paul O’Regan, Dónal Landers, and André Freitas. SemEval-2023 task 7: Multi-evidence natural language inference for clinical trial data. In Atul Kr. Ojha, A. Seza Doğruöz, Giovanni Da San Martino, Harish Tayyar Madabushi, Ritesh Kumar, and Elisa Sartori, editors, Proceedings of the 17th International Workshop on Semantic Evaluation (SemEval-2023), pages 2216–2226, Toronto, Canada, July 2023b. Association for Computational Linguistics. doi:10.18653/v1/2023.semeval-1.307. URL https://aclanthology.org/2023.semeval-1.307.

Tiwalayo Eisape, Michael Tessler, Ishita Dasgupta, Fei Sha, Sjoerd Steenkiste, and Tal Linzen. A systematic comparison of syllogistic reasoning in humans and language models. In Kevin Duh, Helena Gomez, and Steven Bethard, editors, Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), pages 8425–8444, Mexico City, Mexico, June 2024. Association for Computational Linguistics. doi:10.18653/v1/2024.naacl-long.466. URL https://aclanthology.org/2024.naacl-long.466.

Mael Jullien, Marco Valentino, and André Freitas. SemEval-2024 task 2: Safe biomedical natural language inference for clinical trials. In Atul Kr. Ojha, A. Seza Doğruöz, Harish Tayyar Madabushi, Giovanni Da San Martino, Sara Rosenthal, and Aiala Rosá, editors, Proceedings of the 18th International Workshop on Semantic Evaluation (SemEval-2024), pages 1947–1962, Mexico City, Mexico, June 2024. Association for Computational Linguistics. doi:10.18653/v1/2024.semeval-1.271. URL https://aclanthology.org/2024.semeval-1.271.

Geonhee Kim, Marco Valentino, and André Freitas. A mechanistic interpretation of syllogistic reasoning in autoregressive language models. arXiv preprint arXiv:2408.08590, 2024.

Fei Yu, Hongbo Zhang, Prayag Tiwari, and Benyou Wang. Natural language reasoning, a survey, 2023. URL https://arxiv.org/abs/2303.14725.

Zehui Zhao, Laith Alzubaidi, Jinglan Zhang, Ye Duan, and Yuantong Gu. A comparison review of transfer learning and self-supervised learning: Definitions, applications, advantages and limitations. Expert Systems with Applications, 242:122807, 2024. ISSN 0957-4174. doi:10.1016/j.eswa.2023.122807. URL https://www.sciencedirect.com/science/article/pii/S0957417423033092.

Ian Porada, Alessandro Sordoni, and Jackie Cheung. Does pre-training induce systematic inference? How masked language models acquire commonsense knowledge. In Marine Carpuat, Marie-Catherine de Marneffe, and Ivan Vladimir Meza Ruiz, editors, Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 4550–4557, Seattle, United States, July 2022. Association for Computational Linguistics. doi:10.18653/v1/2022.naacl-main.337. URL https://aclanthology.org/2022.naacl-main.337.

Magdalena Wysocka, Oskar Wysocki, Maxime Delmas, Vincent Mutel, and André Freitas. Large language models, scientific knowledge and factuality: A framework to streamline human expert evaluation. Journal of Biomedical Informatics, page 104724, 2024.

David Croft, Antonio Fabregat, Kristina S Keays, Mark Rutherford, Robin HW Van Iersel, Esteban D’Eustachio, Henning Hermjakob, Lincoln Stein, and Peter D’Eustachio. Reactome: a knowledgebase of biological pathways. Nucleic acids research, 42(D1):D472–D477, 2014.

Gregor Betz, Christian Voigt, and Kyle Richardson. Critical thinking for language models. In Proceedings of the 14th International Conference on Computational Semantics (IWCS), pages 63–75. Association for Computational Linguistics, 2021. URL https://aclanthology.org/2021.iwcs-1.7.

Gang Fang, Wen Wang, Vanja Paunic, Hamed Heydari, Michael Costanzo, Xiaoye Liu, Xiaotong Liu, Benjamin VanderSluis, Benjamin Oately, Michael Steinbach, et al. Discovering genetic interactions bridging pathways in genome-wide association studies. Nature communications, 10(1):4274, 2019.

Jakob Prange, Khalil Mrini, and Noah A. Smith. Challenges and opportunities in nlp for systematic generalization: Beyond compositionality. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics. Association for Computational Linguistics, 2023.

Emily Pitler, Chris Callison-Burch, and Ellie Pavlick. The logic of learning: Syllogistic reasoning in modern ai systems. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics. Association for Computational Linguistics, 2023.

Albert Q Jiang, Alexandre Sablayrolles, Arthur Mensch, Chris Bamford, Devendra Singh Chaplot, Diego de las Casas, Florian Bressand, Gianna Lengyel, Guillaume Lample, Lucile Saulnier, et al. Mistral 7b. arXiv preprint arXiv:2310.06825, 2023.

AI Team Mistral. Mixtral of experts - a high quality sparse mixture-of-experts, 2023. URL https://mistral.ai/news/mixtral-of-experts/.

Team Gemma and Deepmind Google. Gemma: Open models based on gemini research and technology, 2024. URL https://storage.googleapis.com/deepmind-media/gemma/gemma-report.pdf.

AI@Meta. Llama 3 model card, 2024. URL https://github.com/meta-llama/llama3/blob/main/MODEL_CARD.md.

Yanis Labrak, Adrien Bazoge, Emmanuel Morin, Pierre-Antoine Gourraud, Mickael Rouvier, and Richard Dufour. Biomistral: A collection of open-source pretrained large language models for medical domains, 2024.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. BERT: Pre-training of deep bidirectional transformers for language understanding. In Jill Burstein, Christy Doran, and Thamar Solorio, editors, Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186, Minneapolis, Minnesota, June 2019. Association for Computational Linguistics. doi:10.18653/v1/N19-1423. URL https://aclanthology.org/N19-1423.

Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. Roberta: A robustly optimized bert pretraining approach. ArXiv, abs/1907.11692, 2019. URL https://api.semanticscholar.org/CorpusID:198953378.

Samuel R. Bowman, Gabor Angeli, Christopher Potts, and Christopher D. Manning. A large annotated corpus for learning natural language inference. In Lluís Màrquez, Chris Callison-Burch, and Jian Su, editors, Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, pages 632–642, Lisbon, Portugal, September 2015. Association for Computational Linguistics. doi:10.18653/v1/D15-1075. URL https://aclanthology.org/D15-1075.

Adina Williams, Nikita Nangia, and Samuel Bowman. A broad-coverage challenge corpus for sentence understanding through inference. In Marilyn Walker, Heng Ji, and Amanda Stent, editors, Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), pages 1112–1122, New Orleans, Louisiana, June 2018. Association for Computational Linguistics. doi:10.18653/v1/N18-1101. URL https://aclanthology.org/N18-1101.

Leonardo Bertolazzi, Albert Gatt, and Raffaella Bernardi. A systematic analysis of large language models as soft reasoners: The case of syllogistic inferences, 2024. URL https://arxiv.org/abs/2406.11341.

Hanmeng Liu, Zhiyang Teng, Ruoxi Ning, Jian Liu, Qiji Zhou, and Yue Zhang. Glore: Evaluating logical reasoning of large language models, 2023. URL https://arxiv.org/abs/2310.09107.

Jason Wei, Xuezhi Wang, Dale Schuurmans, Maarten Bosma, Brian Ichter, Fei Xia, Ed Chi, Quoc Le, and Denny Zhou. Chain-of-thought prompting elicits reasoning in large language models, 2023. URL https://arxiv.org/abs/2201.11903.

Jie Huang and Kevin Chen-Chuan Chang. Towards reasoning in large language models: A survey. In Anna Rogers, Jordan Boyd-Graber, and Naoaki Okazaki, editors, Findings of the Association for Computational Linguistics: ACL 2023, pages 1049–1065, Toronto, Canada, July 2023. Association for Computational Linguistics. doi:10.18653/v1/2023.findings-acl.67. URL https://aclanthology.org/2023.findings-acl.67.

Ishita Dasgupta, Andrew K. Lampinen, Stephanie C. Y. Chan, Hannah R. Sheahan, Antonia Creswell, Dharshan Kumaran, James L. McClelland, and Felix Hill. Language models show human-like content effects on reasoning tasks, 2024. URL https://arxiv.org/abs/2207.07051.

Adam S Brown and Chirag J Patel. A standard database for drug repositioning. Scientific data, 4(1):1–7, 2017.

Daniel Scott Himmelstein, Antoine Lizee, Christine Hessler, Leo Brueggeman, Sabrina L Chen, Dexter Hadley, Ari Green, Pouya Khankhanian, and Sergio E Baranzini. Systematic integration of biomedical knowledge prioritizes drugs for repurposing. Elife, 6:e26726, 2017.

Adriana Carolina Gonzalez-Cavazos, Anna Tanska, Michael Mayers, Denise Carvalho-Silva, Brindha Sridharan, Patrick A Rewers, Umasri Sankarlal, Lakshmanan Jagannathan, and Andrew I Su. Drugmechdb: A curated database of drug mechanisms. Scientific Data, 10(1):632, 2023.

Benjamin M Gyori, John A Bachman, Kartik Subramanian, Jeremy L Muhlich, Lucian Galescu, and Peter K Sorger. From word models to executable models of signaling networks using automated assembly. Molecular systems biology, 13(11):954, 2017.

John A Bachman, Benjamin M Gyori, and Peter K Sorger. Automated assembly of molecular mechanisms at scale from text mining and curated databases. Molecular Systems Biology, 19(5):e11325, 2023.

Authors:

(1) Magdalena Wysocka, National Biomarker Centre, CRUK-MI, Univ. of Manchester, United Kingdom;

(2) Danilo S. Carvalho, National Biomarker Centre, CRUK-MI, Univ. of Manchester, United Kingdom and Department of Computer Science, Univ. of Manchester, United Kingdom;

(3) Oskar Wysocki, National Biomarker Centre, CRUK-MI, Univ. of Manchester, United Kingdom;

(4) Marco Valentino, Idiap Research Institute, Switzerland;

(5) André Freitas, National Biomarker Centre, CRUK-MI, Univ. of Manchester, United Kingdom, Department of Computer Science, Univ. of Manchester, United Kingdom and Idiap Research Institute, Switzerland.

This paper is available on arXiv under the CC BY-NC-SA 4.0 license.