Authors:

(1) Samson Yu, Dept. of Computer Science, National University of Singapore ([email protected]);

(2) Kelvin Lin, Dept. of Computer Science, National University of Singapore;

(3) Anxing Xiao, Dept. of Computer Science, National University of Singapore;

(4) Jiafei Duan, University of Washington;

(5) Harold Soh, Dept. of Computer Science, National University of Singapore and NUS Smart Systems Institute ([email protected]).

Table of Links

- Abstract and I. Introduction

- II. Related Work

- III. PhysiClear - Tactile and Physical Understanding Training & Evaluation Suite

- IV. Octopi - Vision-Language Property-Guided Physical Reasoning

- V. Experimental Setup

- VI. Experimental Results

- VII. Ablations

- VIII. Conclusion and Discussion, Acknowledgements, and References

- Appendix for Octopi: Object Property Reasoning with Large Tactile-Language Models

- APPENDIX A: ANNOTATION DETAILS

- APPENDIX B: OBJECT DETAILS

- APPENDIX C: PROPERTY STATISTICS

- APPENDIX D: SAMPLE VIDEO STATISTICS

- APPENDIX E: ENCODER ANALYSIS

- APPENDIX F: PG-INSTRUCTBLIP AVOCADO PROPERTY PREDICTION

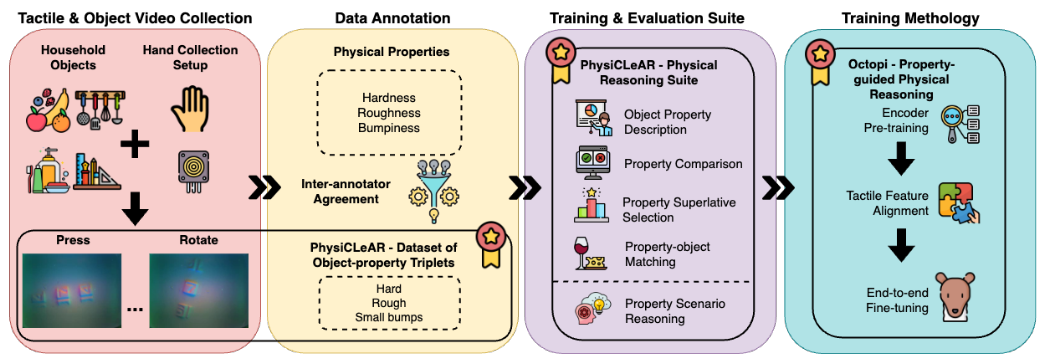

Abstract—Physical reasoning is important for effective robot manipulation. Recent work has investigated both vision and language modalities for physical reasoning; vision can reveal information about objects in the environment and language serves as an abstraction and communication medium for additional context. Although these works have demonstrated success on a variety of physical reasoning tasks, they are limited to physical properties that can be inferred from visual or language inputs. In this work, we investigate combining tactile perception with language, which enables embodied systems to obtain physical properties through interaction and apply commonsense reasoning. We contribute a new dataset PHYSICLEAR, which comprises both physical/property reasoning tasks and annotated tactile videos obtained using a GelSight tactile sensor. We then introduce OCTOPI, a system that leverages both tactile representation learning and large vision-language models to predict and reason about tactile inputs with minimal language fine-tuning. Our evaluations on PHYSICLEAR show that OCTOPI is able to effectively use intermediate physical property predictions to improve its performance on various tactile-related tasks. PHYSICLEAR and OCTOPI are available at https://github.com/clear-nus/octopi.

I. INTRODUCTION

This paper extends large vision-language models (LVLMs) with a sense of touch. We posit that incorporating a tactile modality into LVLMs will enable better physical reasoning in real-world environments. As an example, Fig. 1 illustrates how commonsense knowledge is applied together with tactile information to complete a novel physical task. Here, the robot combines its tactile inputs with the LLM’s commonsense knowledge (that ripe avocados are soft) to correctly select the ripe avocado. We use a visual-tactile sensor, the GelSight [60], which provides image frames that reveal physical object properties such as texture and hardness [59]. However, there remains a significant domain gap between the natural images that typical LVLMs are trained on and tactile data.
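The avocado example can be illustrated with a minimal sketch, assuming that an upstream model has already turned each tactile reading into a hardness label. The label names, the `TactileReading` class, and the `select_ripe_avocado` helper are hypothetical and only show how a predicted property and a commonsense prior (ripe avocados are soft) combine into a selection; this is not the OCTOPI implementation.

```python
from dataclasses import dataclass

# Hypothetical ordinal scale for hardness labels (illustrative assumption,
# not the annotation scheme of the dataset).
HARDNESS_ORDER = {"soft": 0, "moderately hard": 1, "hard": 2}


@dataclass
class TactileReading:
    object_id: str
    hardness: str  # assumed to be predicted from tactile input upstream


def select_ripe_avocado(readings):
    """Return the id of the softest object, using the prior 'ripe avocados are soft'."""
    if not readings:
        raise ValueError("at least one tactile reading is required")
    softest = min(readings, key=lambda r: HARDNESS_ORDER[r.hardness])
    return softest.object_id


readings = [
    TactileReading("avocado_a", "hard"),
    TactileReading("avocado_b", "soft"),
    TactileReading("avocado_c", "moderately hard"),
]
print(select_ripe_avocado(readings))  # prints "avocado_b"
```

In this sketch the commonsense step is a hard-coded rule; in the setting described above, that knowledge would instead come from the language model.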

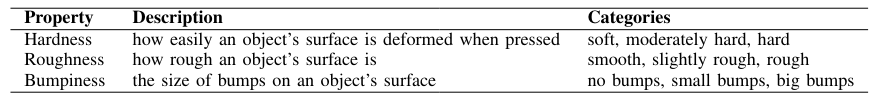

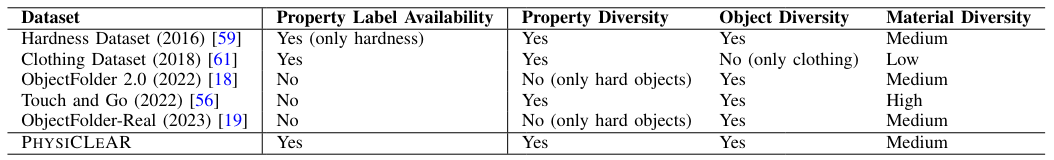

To bridge this gap, we contribute the PHYSICLEAR dataset, which comprises GelSight images of a variety of real-world objects, along with object labels and part annotations. PHYSICLEAR complements existing tactile datasets [59, 61, 18, 56, 19] by providing three physical property annotations (hardness, roughness, and bumpiness) that have been used in prior research [43, 20, 38, 10, 5, 26] and can potentially be inferred from the GelSight data. PHYSICLEAR also includes a training and evaluation suite comprising five reasoning tasks, which can serve as a benchmark for the research community.

Using PHYSICLEAR, we develop OCTOPI (Object Comprehension with Tactile Observations for Physical Intelligence). OCTOPI is a LLaMA-based [49, 50] LVLM (Vicuna [11]) equipped with a CLIP-based [39] tactile encoder, whose representations have been aligned via projection. In experiments, we show that OCTOPI is able to use its tactile modality to predict object properties and reason about scenarios including avocado ripeness.
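To make "aligned via projection" concrete, the following is a minimal sketch in plain Python. It assumes a single linear layer that maps tactile encoder features into the language model's embedding space, and it assumes the projected tactile tokens are placed before the text embeddings. Both choices, along with the toy dimensions and all function names, are illustrative assumptions; the text above does not specify the projection's form.

```python
import random

ENCODER_DIM = 4  # toy size of a tactile encoder feature (assumption)
LLM_DIM = 6      # toy size of a language model token embedding (assumption)


def make_projection(in_dim, out_dim, seed=0):
    """Create a randomly initialized linear layer as (weights, bias)."""
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weights, bias


def project(features, weights, bias):
    """Map one encoder feature vector into the language model's embedding space."""
    if any(len(row) != len(features) for row in weights):
        raise ValueError("feature size does not match projection input size")
    return [sum(w * x for w, x in zip(row, features)) + b
            for row, b in zip(weights, bias)]


def build_input_sequence(text_embeddings, tactile_features, weights, bias):
    """Place projected tactile tokens before the text token embeddings."""
    tactile_tokens = [project(f, weights, bias) for f in tactile_features]
    return tactile_tokens + text_embeddings


weights, bias = make_projection(ENCODER_DIM, LLM_DIM)
tactile_features = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]  # two tactile frames
text_embeddings = [[0.0] * LLM_DIM for _ in range(3)]            # three text tokens
sequence = build_input_sequence(text_embeddings, tactile_features, weights, bias)
print(len(sequence), len(sequence[0]))  # prints "5 6"
```

The point of the sketch is only that, after projection, tactile features share the dimensionality of text token embeddings and can be consumed by the language model as additional tokens.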

Contributions. In summary, this paper makes the following key contributions:

• A new GelSight dataset, PHYSICLEAR, that exhibits property diversity, object diversity, and material diversity for selected physical properties.

• OCTOPI, a framework for physical reasoning that leverages vision-based tactile sensors and the commonsense reasoning capabilities of LLMs.

• An accompanying training and evaluation suite spanning five tasks, together with baseline results using OCTOPI.

We hope that PHYSICLEAR and OCTOPI will spur research in tactile-enabled physical reasoning for embodied AI systems [14].

This paper is available on arXiv under the CC BY 4.0 DEED license.